A Reddit post by a student at Singapore’s prestigious Nanyang Technological University (NTU) has ignited a storm of debate about how artificial intelligence (AI) should be used in higher education. The student revealed she was accused of academic misconduct for using a digital tool to alphabetize her citations, an action the university deemed a potential violation of its rules on generative AI tools such as ChatGPT.

What initially seemed like a minor administrative misunderstanding turned into a viral discussion, raising serious questions: Are universities keeping up with the AI era, or are they punishing students for adapting faster than policy allows?

What Really Happened at NTU?

According to the original Reddit post, the student used a citation sorter for her term paper. Although this was not ChatGPT or any generative AI used for writing or brainstorming, NTU flagged her paper after spotting a few typos and opened an academic misconduct investigation.

Soon after, two other students came forward with similar experiences. One was penalized for using ChatGPT during the initial research phase, although she said she had not used any AI-generated content in the final paper.

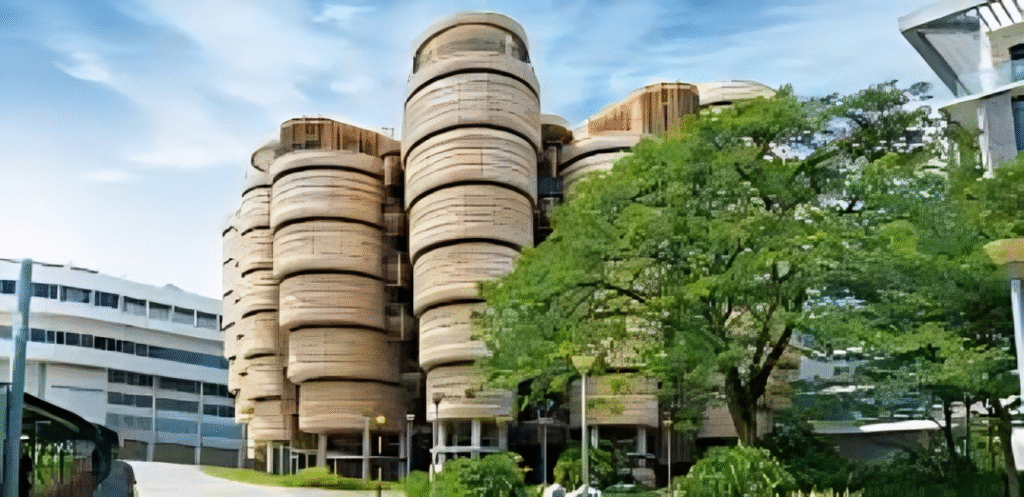

Initially, NTU defended its zero-tolerance policy towards AI tools in academic work, despite marketing itself as a forward-thinking institution that embraces AI for learning. However, after the post went viral and drew support from thousands of people online, the university reversed its decision and removed the academic misconduct label from the student’s transcript.

The Global AI-Education Conundrum

While the NTU saga is uniquely Singaporean, it touches on a much bigger issue: no one, whether students, teachers, or policymakers, truly knows what responsible AI use in education looks like.

With 84% of Singaporean students reportedly using ChatGPT or similar tools weekly for homework, according to a local media survey, drawing clear lines between cheating and innovation is no easy task.

The controversy also reflects growing global confusion, particularly in Asia, where academic rigor is deeply tied to social mobility and national success. Countries like China, South Korea, and Singapore have long been praised for their competitive academic environments, but AI threatens to rewrite the rules.

China’s AI Cheating Crackdown: A Case in Point

In China, many universities already use AI-powered cheating detection software. These tools analyze students’ writing for patterns typical of AI-generated text. But the technology is far from perfect. Social media reports suggest some students are “dumbing down” their vocabulary and sentence structure just to fool the detectors. Others are even paying for access to detection tools so they can pre-check their work before submission.

Rather than cultivating better thinking, this arms race encourages surface-level compliance over deep learning.

The Risk of Creating Guinea Pigs

Despite lofty promises from edtech companies, which call this “the biggest transformation in education history,” there is little long-term data on how AI tools affect learning outcomes, memory retention, and cognitive development.

Studies remain inconclusive. Most academic research on AI use in learning is still in early stages or focused narrowly on productivity gains, not holistic educational impact.

This raises a concern: Are we turning an entire generation of learners into experimental subjects? The desire to modernize classrooms should not come at the expense of students’ intellectual development.

What Educators Should Focus On: Learning Over Policing

Experts like Yeow Meng Chee, provost of the Singapore University of Technology and Design, argue that education must evolve alongside technology rather than fight it. The goal shouldn’t be to ban tools like ChatGPT, but to understand them and teach students how to use them responsibly.

This means:

- Emphasizing the learning process over the final product.

- Encouraging transparency about tool use.

- Teaching AI literacy as part of academic integrity.

- Reinforcing human judgment to interpret AI-generated results.

After all, tools like ChatGPT are not always accurate. They can hallucinate facts, misquote sources, or produce biased interpretations. Teaching students to critically evaluate such outputs is an essential 21st-century skill.

The Irony: AI Use Is Encouraged in the Workforce

The same AI tools flagged in classrooms are welcomed in the workplace. Tasks like citation management, data sorting, and initial research are often delegated to AI-powered software in modern offices. Penalizing students for using tools that boost productivity risks widening the gap between education and employability.

According to the World Economic Forum, by 2025, over 50% of all employees will need reskilling, much of it around AI and digital tools. Universities that ignore AI adoption may leave their students unprepared for real-world demands.
